The early days of research on Artificial Intelligence, Frank Rosenblatt, a scientist at Cornell University in the United States, invented what he called the [perceptron] . The perceptron was an algorithm designed to classify objects it was shown and an ancestor of modern [Artificial Intelligence] . When [Rosenblatt] became a little boastful at a press conference in 1958, the New York Times picked up on it and went a little overboard with excitement. “NEW NAVY DEVICE LEARNS BY DOING; [Psychologist] Shows Embryo of Computer Designed to Read and Grow Wiser”, read the title of an article. And the author went on:

[perceptron] In machine learning, perceptron is an algorithm for supervised learning of binary classifiers. A binary classifier is a function which can decide whether or not an input, represented by a vector of numbers, belongs to some specific class.

[Artificial Intelligence]

[Rosenblatt] I hope there are no problems anymore

[Psychologist] ?

The Navy said the perceptron would be the first non-living mechanism “capable of receiving, recognizing and identifying its surroundings without any human training or control”

Does this tone sound familiar?

Not a week goes by without news of new breakthroughs in Artificial Intelligence and of algorithms being able to complete tasks which were previously reserved to humans. There is plenty of talk these days about the automation of jobs and our new algorithmic overlords which get complicated tasks done with seemingly very little human involvement. This, however, is a fallacy — that we cannot see the humans behind AI does not mean that there are none. And this does not only apply to the engineers developing these algorithms. In fact, there is a new class of blue-collar jobs curating the data which is so critical to the functioning of the algorithms which are said to power our digitalized economy.

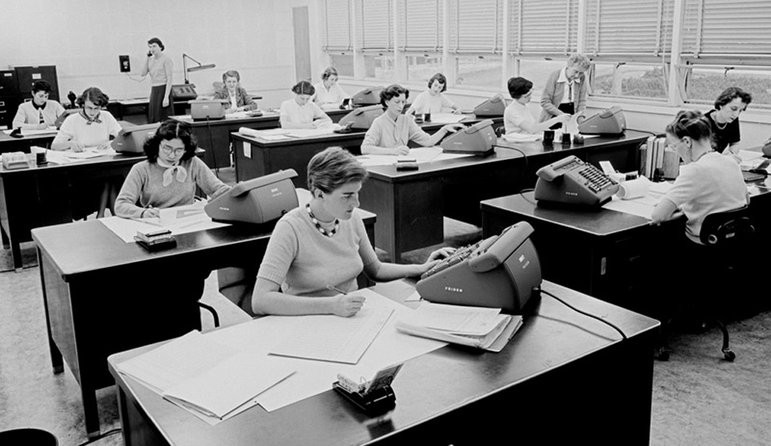

It would not be the first time that we overlook the masses of people working hard to make technology work. Not much time has passed since “computers” were actually people, a substantial share of whom (underpaid) women, working on computations which have since been automated. Women were also among the first computer programmers, operating complex machines such as the one built to run Rosenblatt’s perceptron algorithm, yet overshadowed by the engineers of these first computers. Little attention was paid to these backroom workers of big technology firms, government agencies, and research labs at the time. Only recently, there has been an uptick in recognition of these workers, in academia but also, for example, in Hollywood. We should learn from history and not repeat these mistakes. To do so, we need to take a look at who the new hidden figures of technology are, what their job is, and why they are needed.

When people talk about AI these days, what they really mean is usually Machine Learning (ML). Most ML algorithms, in turn, are essentially statistical models which “learn” how to perform a particular task by analyzing large samples of data — “training data” — they were previously fed. Developers rely on these models because of what is referred to as [Polanyis Paradox] : We know more than we can tell, that is. Much of our knowledge is tacit, which is why we can’t simply program it into software in the form of hard rules; no matter how trivial the task, to teach computer programs we need to show, or “train”, them. Thus, a very sophisticated, but untrained ML algorithm is like a sports car without wheels: it may still look nice, but it won’t get you anywhere — it’s essentially useless. Similarly, if you feed your ML model garbage training data, it will spit out garbage results. But what exactly is “training data”? Let’s say you run a blueberry muffin factory, but every once in a while a runaway dog from a nearby animal shelter accidentally jumps onto the conveyer built and your AI-powered packaging robot needs to differentiate between the muffins and the dogs so that no dog ends up on a grocery store shelf. For the robot to pull this off, it needs to be fed with a lot of pictures of muffins and dogs, and they need to be labeled accordingly so it can deduce their identifying characteristics. The same principle applies, for example, to self-driving cars (which need to be able to identify a stop sign, amongst other things) and most other AI applications.

[Polanyis Paradox] Summarised in the slogan "We can know more than we can tell", Polanyi’s Paradox is mainly to explain the cognitive phenomenon that there exist many tasks which we, human beings, understand intuitively (tacit knowledge) how to perform but cannot verbalise the rules or procedures behind it.

This poses a problem for companies: How do they obtain labeled or annotated data? Even if they get their hands on large troves of data — such as photos (for image recognition algorithms), voice recordings (for speech recognition), or written text (for sentiment analysis) — labeling all this data is a tedious task, and one which needs to be completed by humans. It is work.

50 ways to label data

There are different ways to get your data labeled. Some firms label their data themselves — although this can be costly, as hiring people simply for these tasks costs firms both money and flexibility. Other companies even find ways to get people to label their data for free. Ever wonder why Google’s reCAPTCHA keeps asking you to identify traffic signs on blurry photos? (A small hint: Google’s holding company Alphabet also owns Waymo, which is at the forefront of autonomous driving) In most cases, however, it is paid workers who label and curate data and an entire outsourcing industry has sprung up around it. Be it in factory-like workplaces around the world or through remote work at home or on a smartphone: These are the invisible workers who power Artificial Intelligence.

Much like Western firms started offshoring manufacturing jobs to developing countries starting in the 1960s and 1970s, tech companies are outsourcing data labeling to foreign companies running what can be described as data labeling factories. And much like in the past, these jobs are moved to places — from China to Central Africa — where wages are low and working conditions more favorable for them. There, masses of workers in former warehouses and large open space offices sit in front of computers and spend their workdays labeling data. As Li Yuan quotes the co-founder of a Chinese data labeling company in a recent piece for the New York Times:

We’re the construction workers in the digital world. Our job is to lay one brick after another […] But we play an important role in A.I. Without us, they can’t build the skyscrapers.

Another way to outsource data labeling is through online [crowdworking platforms] , relying on their users completing tasks broken down into small components all around the world. This includes large platforms such as Amazon’s Mechanical Turk with its hundreds of thousands of registered crowdworkers, but also specialized platforms. Some large technology companies even have their own crowdworking platforms to curate data, others can rely on smaller platform services that focus exclusively on data labeling. Workers’ motivations for these jobs vary: some people want to make an extra buck in their spare time and value the flexibility such platforms offer. As one user of Spare5, a specialized data labeling app, explains in a promotional video:

It’s just, you know, something I can easily do, just pull my phone out, do a few tasks, make a few dockets on the way home and to work. […] For me it’s a little bit rewarding knowing that I spent this time really digging deep, trying to find the information […] I feel like I am solving a mystery, solving this puzzle.

Others, however, rely on these platform jobs for their livelihood and are oftentimes faced with substantial risks: low pay, no (or few) employment protections and employee rights, and along with that enormous uncertainty.

The blue-collar job of the age of AI

Taking a step back, it becomes evident that a new type of low-skill, blue-collar job has emerged to satisfy technology’s appetite for labeled data. As opposed to doing physical assembly line work in the industrial economy, this new AI working class has become part of a digitalized “data supply chain”. Of course, not all of these jobs are low-skill — an algorithm detecting cancer on images from CT scans needs to be trained by experienced radiologists. But, in accordance with Polanyi’s paradox, most tasks researchers are trying to get ML applications to complete are still fairly simple for humans and training these algorithms requires little more than common sense.

It is thus important to make sure that this new class of jobs becomes a driver of economic security for workers, not a source of exploitation. As for supply chains in more “traditional” global industries, such as mining or the clothing industry, it is on all of us — governments, consumers, and firms — to ensure that those who label data work under decent conditions. It will be difficult for governments to regulate work in this global, borderless market for data labeling services. Yet, they must strive to adapt existing institutions aimed at improving working conditions and to push companies to create fair data supply chains. Both crowdwork and work in data labeling factories pose different challenges in this respect, but none of these obstacles are insurmountable. Overcoming them does, however, require regulatory efforts as well as international and cross-sectoral cooperation. Firms, in turn, must offer some transparency regarding their data supply chains. And although first-world consumers still remain largely oblivious of the conditions under which their clothes and gadgets are manufactured in far-away places, we, too, should seek to hold tech companies accountable for the way they power their artificially intelligent applications. After all, consumers do have leverage and responsible consumption can have an impact on firms’ behavior. No matter how excited we get about the pace of technological progress, it is as important now as it was in the past to keep reminding ourselves that there are people behind most of the advances in AI we hear about day in and day out in the media — many of them, actually. And as ML researchers are looking for an ever-growing number of new tasks to be automated, these jobs are not going to go away soon. Let’s make sure they are decent jobs then.

sources